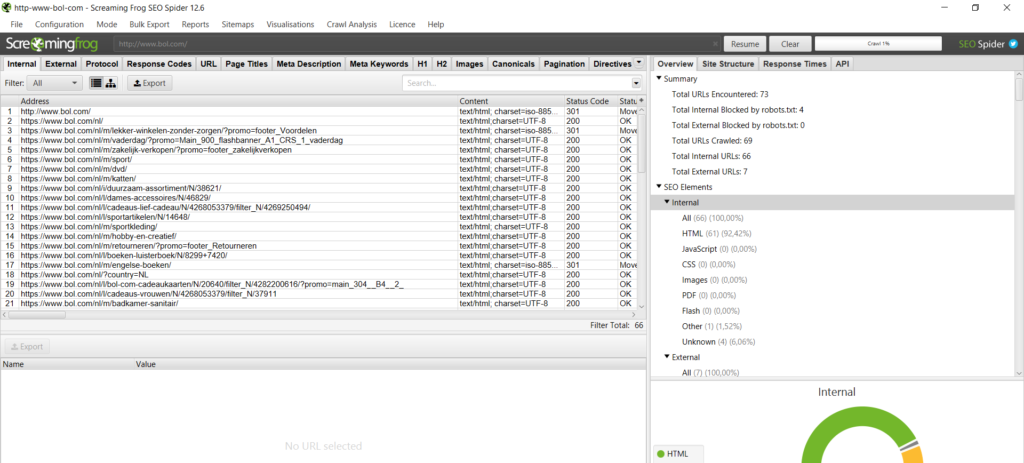

Advanced technical SEO is not without its challenges, but luckily there are many tools on the market we can use. By combining some of these tools, we can not only address the challenges we face but also create new solutions and take our SEO to the next level.

In this guide I will combine three distinct tools: a major cloud provider (Google Cloud), a leading open source operating system (Ubuntu) and a crawl analysis tool (Screaming Frog SEO Spider). Examples of solutions this powerful combination can bring to the table are:

- Creating XML Sitemaps from daily scheduled crawls and automatically making them publicly available for search bots to use when crawling and indexing your website.

- Having your own personal in-house SEO dashboard built from repeat crawls.

If you are already familiar with Google Cloud and just want to download, install and/or update Screaming Frog SEO Spider on the remote instance in a jiffy, you can skip most of this guide. Log into the remote instance and issue the following one-line command in its terminal:

`wget -O install.sh && chmod +x install.sh && source.`

If this does not work, or if you want to better understand how to set up the remote instance, transfer data, schedule crawls and keep your crawl running when you are not logged into the remote instance, continue reading.

Dependencies

Before this guide continues, there are a few points that need to be addressed first.

First, the commands in this guide are written as if your primary local operating system is a Linux distribution. However, most of the commands work the same way locally on Windows and/or macOS, perhaps with minor tweaks. In case of doubt, or if you want to run Linux locally on Windows, you can install various Linux distributions for free from the official Windows Store, for example Ubuntu 18.04 LTS. It also helps to have some experience with the terminal (command-line interface) on your operating system.

Second, you will need a Google Cloud account with billing enabled, a Google Cloud project, and the gcloud command-line tool installed locally on your Linux, macOS or Windows operating system.

If you created a new Google Cloud project for this guide, you can visit your project's Google Compute Engine overview page in a web browser. Doing so automatically enables all the APIs needed for the tasks below.
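
You can also handle the Google Cloud prerequisites from the terminal instead of the browser. The following is a minimal sketch. It assumes the gcloud tool is already installed and authenticated, for example via `gcloud init`. The project ID `my-seo-crawler` and the billing account ID are hypothetical placeholders. By default the script only prints the gcloud commands. Setting `DRY_RUN=0` makes it execute them. It enables just the Compute Engine API (`compute.googleapis.com`), whereas visiting the overview page may enable further APIs.

```shell
#!/usr/bin/env bash
# Minimal sketch of the Google Cloud prerequisites, run from your local terminal.
# PROJECT_ID and BILLING_ACCOUNT are hypothetical placeholders: replace them
# with your own project ID and billing account ID.
set -euo pipefail

PROJECT_ID="${PROJECT_ID:-my-seo-crawler}"
BILLING_ACCOUNT="${BILLING_ACCOUNT:-000000-000000-000000}"
# By default the commands are only printed; set DRY_RUN=0 to execute them.
DRY_RUN="${DRY_RUN:-1}"

# Print the command (dry run) or execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

setup_project() {
  # Create the project and make it the default for later gcloud commands.
  run gcloud projects create "$PROJECT_ID"
  run gcloud config set project "$PROJECT_ID"
  # Link a billing account; Compute Engine cannot be used without billing.
  # (Older Cloud SDK releases may only offer this as "gcloud beta billing".)
  run gcloud billing projects link "$PROJECT_ID" --billing-account="$BILLING_ACCOUNT"
  # Enable the Compute Engine API, a command-line alternative to opening
  # the Compute Engine overview page in the browser.
  run gcloud services enable compute.googleapis.com
}

setup_project
```

Saved as a hypothetical `setup.sh`, it would run with `DRY_RUN=0 PROJECT_ID=your-project-id BILLING_ACCOUNT=your-billing-id bash setup.sh`. The dry-run default lets you review the commands before anything is created or billed.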